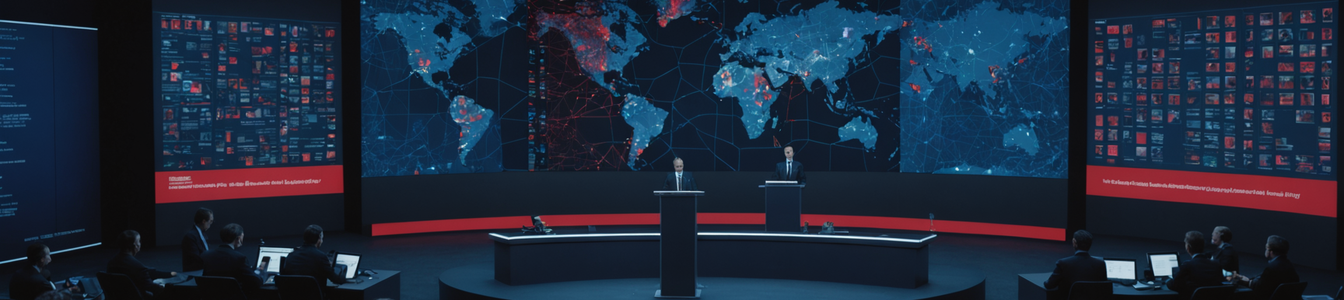

The emergence of AI-generated deepfake technology has ushered in a new era of political warfare, where sophisticated disinformation campaigns are targeting high-profile political figures with unprecedented precision. Recent incidents across multiple continents demonstrate the escalating threat these technologies pose to international relations and democratic processes.

In one particularly concerning case, fabricated images depicting former President Donald Trump and Russian President Vladimir Putin engaging in friendly interactions have circulated online, in an apparent attempt to undermine Ukraine peace efforts. These AI-generated visuals show the leaders in seemingly cordial settings, complete with realistic facial expressions and body language that would be virtually indistinguishable from authentic photographs to the untrained eye.

Simultaneously, Indonesia's Finance Minister Sri Mulyani Indrawati became the target of a malicious deepfake campaign that fabricated her making derogatory statements about teachers. The manipulated video, which spread rapidly across social media platforms, appeared to show the minister calling teachers 'a burden on the state', a claim she immediately denounced as false and technologically fabricated.

Technical analysis of these campaigns reveals alarming advancements in deepfake technology. Modern generative adversarial networks (GANs) and diffusion models can now produce synthetic media that often evades traditional detection methods. These systems rely on neural networks trained on large volumes of real footage to learn and replicate the subtle facial movements, vocal patterns, and mannerisms specific to each target individual.

The cybersecurity implications are profound. Detection mechanisms that relied on identifying visual artifacts or inconsistencies in earlier deepfake generations are becoming increasingly obsolete. New approaches involving blockchain verification, digital watermarking, and AI-powered detection systems are being developed, but the rapid pace of technological advancement creates a constant cat-and-mouse game between creators and detectors.
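The verification idea behind such approaches can be illustrated with a minimal sketch: a publisher attaches an authentication tag to a media file at release, and anyone holding the key can later check that the bytes are unchanged. This example uses only Python's standard library and an HMAC with a shared secret as a stand-in; real provenance schemes would more likely use public-key signatures, and the function names and key below are hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex tag binding the media content to the publisher's key."""
    # Hash the content first so the tag depends on every byte of the file.
    digest = hashlib.sha256(media_bytes).digest()
    return hmac.new(secret_key, digest, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Check that the media still matches the tag issued at publication."""
    expected = sign_media(media_bytes, secret_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"hypothetical-publisher-key"      # assumption: shared secret
    original = b"original video bytes"       # assumption: placeholder content
    tag = sign_media(original, key)

    print(verify_media(original, key, tag))          # unmodified file
    print(verify_media(original + b"x", key, tag))   # tampered file
```

A check like this only shows that a file matches what a known source published; it says nothing about whether unsigned media is genuine, which is why it complements rather than replaces detection systems.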

From a geopolitical perspective, these campaigns represent a shift in information warfare tactics. State actors and malicious groups can now create convincing false narratives without traditional propaganda infrastructure, lowering the barrier to entry for influencing foreign elections and diplomatic relations. The potential for these technologies to trigger diplomatic incidents or manipulate stock markets presents clear national security threats.

The human factor remains critical in combating this threat. Public awareness and digital literacy campaigns are essential, as even the most sophisticated detection systems cannot prevent initial exposure to malicious content. Educational initiatives must teach citizens to critically evaluate digital media, verify sources through multiple channels, and recognize common manipulation techniques.

Industry response has been developing through collaborations between tech companies, academic institutions, and government agencies. Microsoft's Video Authenticator tool, Facebook's Deepfake Detection Challenge, and DARPA's Media Forensics program represent significant investments in counter-deepfake technology. However, the decentralized nature of AI development means offensive capabilities often outpace defensive measures.

Legal and regulatory frameworks are struggling to keep pace with technological developments. While some jurisdictions have implemented laws specifically targeting malicious deepfake creation and distribution, international consensus and enforcement mechanisms remain limited. The cross-border nature of these campaigns complicates prosecution and attribution.

Looking forward, the cybersecurity community must prioritize several key areas: developing real-time detection systems that can scale across major platforms, establishing international cooperation frameworks for attribution and response, creating standardized verification protocols for authentic media, and investing in public education initiatives.

In recent surveys, 71% of Americans expressed concern about AI's impact on employment. They now face additional worries about AI's potential to undermine truth and democratic institutions. This public sentiment underscores the urgency with which governments and technology companies must address the deepfake threat.

As these technologies continue to evolve, the line between reality and fabrication becomes increasingly blurred. The cybersecurity community's ability to develop effective countermeasures will play a crucial role in determining whether deepfake technology becomes a manageable challenge or an existential threat to informed democracy.

Professional cybersecurity organizations recommend implementing multi-layered defense strategies that combine technical solutions with human vigilance. Regular training for government officials and journalists, investment in detection research, and international cooperation represent the most promising approaches to mitigating this rapidly evolving threat.
