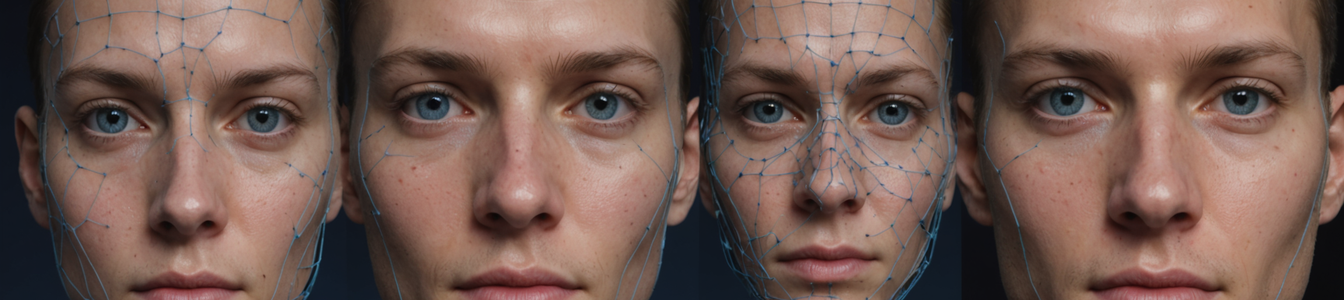

The deepfake crisis has entered a dangerous new phase, with recent cases showing unprecedented sophistication in both political manipulation and celebrity exploitation. Cybersecurity analysts are sounding the alarm as AI-generated content becomes increasingly difficult to distinguish from authentic media, and three trends stand out globally.

Political Resurrection Scams

Several countries have reported disturbing cases of deceased politicians being 'resurrected' through AI. In Belgium, a deepfake of a former prime minister was used to influence current policy debates, and similar incidents have occurred in India and Indonesia. These hyper-realistic simulations rely on generative models, including generative adversarial networks (GANs), to recreate voice patterns, facial expressions, and mannerisms with unsettling accuracy.

Celebrity Exploitation Goes Mainstream

Elon Musk's AI platforms have been implicated in creating non-consensual deepfakes of Scarlett Johansson and Taylor Swift. The technology, originally developed for legitimate entertainment purposes, has been weaponized to generate explicit content without consent. Security researchers note these tools require minimal technical skill, with malicious actors using cloud-based APIs to create convincing fakes in minutes.

Media Manipulation Reaches New Heights

NewsNation anchor Chris Cuomo, formerly of CNN, recently fell for a deepfake parody video in which Alexandria Ocasio-Cortez (AOC) appeared to discuss actress Sydney Sweeney. Although the video was labeled as satire, it was realistic enough to fool both the veteran journalist and thousands of social media users. The incident highlights the erosion of trust in digital media and the urgent need for better verification tools.

Technical Analysis

Many modern deepfakes use diffusion models that can generate 4K-resolution video from limited source material. Cybersecurity firm Darktrace reports a 300% increase in deepfake-related incidents in Q2 2025 alone. The most concerning development is the emergence of so-called 'zero-click' deepfake generators, tools that require no coding knowledge at all.

Cybersecurity Implications

- Identity Verification Systems: Face- and voice-based biometric authentication may no longer be reliable

- Legal Frameworks: Most countries lack adequate laws against synthetic media

- Corporate Security: Executive impersonation attacks are rising sharply

Protective Measures

- Implement multi-factor authentication with liveness detection

- Train employees to recognize deepfakes (watch for unnatural blinking and mismatches between lip movement and audio)

- Advocate for digital watermarking standards for AI-generated content
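The blinking cue above can be partly automated. A widely used heuristic is the eye aspect ratio (EAR): the ratio of vertical to horizontal distances between six landmarks around an eye, which drops sharply when the eye closes. The Python sketch below assumes per-frame eye landmarks have already been extracted by some face-landmark detector (not shown). The function names, the EAR threshold, the minimum blink length, and the "plausible" blink-rate bounds are all illustrative assumptions, not calibrated values, and an abnormal blink rate is only a weak signal on its own.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six landmarks p1..p6.

    p1 and p4 are the eye corners; p2/p6 and p3/p5 are upper/lower lid pairs.
    EAR falls toward zero as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    The default threshold and run length are assumptions for illustration.
    """
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(ears: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip, given one EAR value per video frame."""
    minutes = len(ears) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ears, **kwargs) / minutes


def blink_rate_is_unusual(rate: float, low: float = 5.0, high: float = 40.0) -> bool:
    """Hypothetical screening rule: flag rates outside an assumed human range."""
    return not (low <= rate <= high)


if __name__ == "__main__":
    open_eye = [(0, 0), (2, 1), (4, 1), (6, 0), (4, -1), (2, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # 0.333

    # One minute of synthetic EAR values at 30 fps with no blinks at all.
    staring_clip = [0.3] * 1800
    rate = blinks_per_minute(staring_clip, fps=30)
    print(rate, blink_rate_is_unusual(rate))  # 0.0 True
```

Counting runs of low-EAR frames, rather than single frames, keeps one noisy landmark reading from registering as a blink; in practice the threshold would need tuning per detector and camera setup.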

Deepfake technology is advancing faster than defensive tools, creating a critical window of vulnerability. Although some tech companies are developing detection algorithms, cybersecurity experts emphasize that this is an arms race requiring a coordinated global response.
